When you decide to learn Kubernetes, the first question is where to find an inexpensive playground for experiments. It should be easy to set up and shut down, give you full control, and not cost a fortune.

Through trial and error, I was able to find a solution that works perfectly for me.

To start, we will need:

a Terraform client,

a DigitalOcean account,

and… that’s it.

I would also recommend using a version control system.

Installing Terraform

Terraform is a tool for provisioning infrastructure on cloud providers. It is an open-source project maintained by HashiCorp and is easy to get started with. It supports plenty of cloud hosting providers (AWS, GCP, DigitalOcean, etc.) and SaaS services (GitHub, Datadog, Sentry, etc.).

To install it, download the single binary for your platform and move it to a directory included in your system’s PATH.

Registering a DigitalOcean account

I don’t think I have to teach you how to register. You can use my referral link to get a $100 registration bonus. That is more than enough to experiment with Kubernetes and decide whether you want to continue using it. Obviously, I will also benefit from that (that’s why it is called a “referral”).

After completing the registration, navigate to the API item in the side menu and create a new personal access token. Give it a memorable name (e.g. terraform) and grant it read/write permissions. Copy the token value once it is generated; you will need it in the next step.

Start coding

Now the most exciting part: create an empty directory for your project and open your editor.

Terraform provider configuration

Most Terraform files have the .tf extension. Let’s start by defining the provider and its access credentials; in this case, the provider is DigitalOcean. Create a provider.tf file with the following content:

// provider.tf
provider "digitalocean" {
  token = "<your access token>"
}

Is it that simple? Well, yes and no. This is the minimal provider declaration, but the recommended way is to extract dynamic data such as your API token into a variable, so you can change it without changing the code. Let’s do it right from the beginning and change the file contents to:

// provider.tf
variable "do_token" {}

provider "digitalocean" {
  token = var.do_token
}

You can set the values of variables in a file named terraform.tfvars. Unlike the previous file, the name matters here, because Terraform loads terraform.tfvars automatically, so keep it.

// terraform.tfvars
do_token = "<your access token>"
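The empty variable declaration in provider.tf can carry a bit more information. This is a minimal sketch, assuming Terraform 0.14 or newer for the sensitive argument; the description text is my own wording and not something Terraform or the provider requires:

```hcl
// provider.tf (variant of the variable declaration)
variable "do_token" {
  type        = string
  description = "DigitalOcean personal access token with read/write scope"

  // Assumption: Terraform >= 0.14. Hides the value in plan/apply output.
  // Remove this line on older versions.
  sensitive = true
}
```

If you follow the version control recommendation, keep terraform.tfvars out of the repository, since it holds the token in plain text.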

Describing Kubernetes resources

That’s already enough for Terraform to access the DigitalOcean API and create and destroy resources on your behalf. So let’s describe the first resource: the Kubernetes cluster.

// kube-cluster.tf
resource "digitalocean_kubernetes_cluster" "my_cluster" {
  name    = "my-cluster"
  region  = "fra1"
  version = "1.16.2-do.3"

  node_pool {
    name       = "worker-pool"
    size       = "s-1vcpu-2gb"
    node_count = 2
  }
}

In this resource definition, you provide all the information Terraform needs to create the cluster via the DigitalOcean API. It is important to understand the difference between my_cluster and my-cluster: the first name exists only in the Terraform codebase, where you can use it to refer to the attributes of the resource. The second is the name of the cluster in DigitalOcean, which you will also see in the administrative panel. They don’t have to look alike, of course, but in most cases it makes sense.
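To illustrate what referring to attributes through the Terraform-side name looks like, here is a small sketch of an outputs file. The file name outputs.tf and the output names are hypothetical. As far as I know, endpoint and kube_config are attributes exported by the digitalocean_kubernetes_cluster resource, but check the provider documentation for the version you use:

```hcl
// outputs.tf (hypothetical file name)

// API server URL of the cluster, addressed through the Terraform-side name my_cluster.
output "cluster_endpoint" {
  value = digitalocean_kubernetes_cluster.my_cluster.endpoint
}

// Raw kubeconfig for kubectl; marked sensitive so it is not echoed after apply.
output "kubeconfig" {
  value     = digitalocean_kubernetes_cluster.my_cluster.kube_config[0].raw_config
  sensitive = true
}
```

After the cluster has been created, terraform output cluster_endpoint prints the stored value. Note that the DigitalOcean-side name my-cluster never appears in these references.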

The region parameter defines the physical location of the underlying infrastructure, and version is the version of Kubernetes to deploy. The node_pool block defines the type and number of nodes in the cluster.

You can get the list of available node sizes and Kubernetes versions by installing the doctl command-line tool and executing the following commands:

$ doctl kubernetes options versions
Slug           Kubernetes Version
1.16.2-do.3    1.16.2
1.15.5-do.3    1.15.5
1.14.8-do.3    1.14.8

$ doctl compute size list
Slug           Memory    VCPUs    Disk    Price Monthly    Price Hourly
512mb          512       1        20      5.00             0.007440
s-1vcpu-1gb    1024      1        25      5.00             0.007440
1gb            1024      1        30      10.00            0.014880
s-1vcpu-2gb    2048      1        50      10.00            0.014880
s-1vcpu-3gb    3072      1        60      15.00            0.022320
s-2vcpu-2gb    2048      2        60      15.00            0.022320
s-3vcpu-1gb    1024      3        60      15.00            0.022320
2gb            2048      2        40      20.00            0.029760
s-2vcpu-4gb    4096      2        80      20.00            0.029760
...

Be aware that not every droplet size can be used as a Kubernetes cluster node. In fact, s-1vcpu-2gb is the smallest and cheapest one that can.
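Instead of copying a version slug from the doctl output by hand, the DigitalOcean provider can look it up for you. The sketch below assumes a provider release that ships the digitalocean_kubernetes_versions data source, which may be newer than the one used in this article. The data source label available and the "1.16." prefix are illustrative choices:

```hcl
// kube-cluster.tf (variant)

// Ask the API for the supported versions that match the prefix.
data "digitalocean_kubernetes_versions" "available" {
  version_prefix = "1.16."
}

resource "digitalocean_kubernetes_cluster" "my_cluster" {
  name   = "my-cluster"
  region = "fra1"

  // Latest supported slug matching the prefix, instead of a hard-coded value.
  version = data.digitalocean_kubernetes_versions.available.latest_version

  node_pool {
    name       = "worker-pool"
    size       = "s-1vcpu-2gb"
    node_count = 2
  }
}
```

The trade-off is that the resolved version can change between runs as new patch releases are published, so a hard-coded slug is the more predictable option.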

The number of nodes is up to you: a minimum of 2 nodes is required to prevent downtime during upgrades or maintenance. If you don’t mind downtime (this is not a production system, after all), you can go with one. But a single node will not give you the real experience of containers and requests being balanced across different nodes. I recommend running at least 2 nodes, which is what I used to estimate the price.

Applying changes and validating the result

The short coding part is now over, and we can proceed to testing. First, you have to initialize the Terraform project once to download the provider plugin. To do that, execute terraform init in the project folder.

If there are no errors, run the terraform apply command and confirm the changes by typing yes when prompted. It can take several minutes to complete, but you will see progress feedback:

digitalocean_kubernetes_cluster.my_cluster: Creating...
digitalocean_kubernetes_cluster.my_cluster: Still creating... [10s elapsed]
digitalocean_kubernetes_cluster.my_cluster: Still creating... [20s elapsed]
...
digitalocean_kubernetes_cluster.my_cluster: Still creating... [6m40s elapsed]
digitalocean_kubernetes_cluster.my_cluster: Creation complete after 6m43s [id=1608898b-fdcf-411a-b8e2-0e2a7820539c]

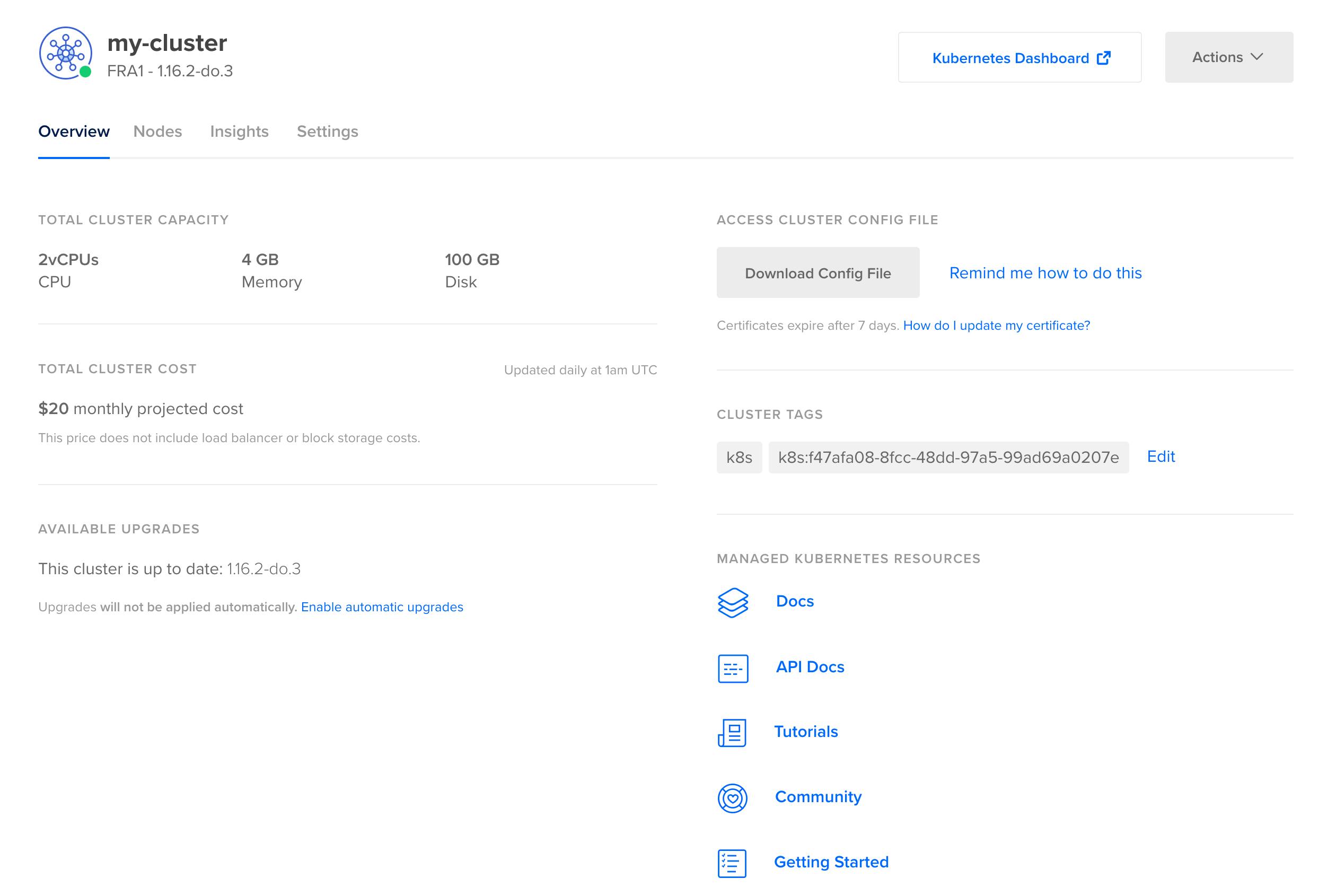

The cluster is created and we can see it in the administrative panel.

DigitalOcean deploys some software to the cluster so that you don’t need to deploy it yourself. Kubernetes Dashboard is one example. With it, you can see deployed workloads, secrets, services, ingresses, etc. To access it, click the “Kubernetes Dashboard” link on the cluster description page.

Terraform state file

Once Terraform starts communicating with the DigitalOcean API, a new file, terraform.tfstate, is created in the project folder. This file tracks the state of the applied changes, essentially connecting your resource definitions to the IDs that DigitalOcean issued for the created resources. These IDs are used to update and delete resources.

To delete the created cluster, execute terraform destroy and confirm when prompted. Congrats! Now you can create and delete a cluster with one command whenever you want to experiment with it.

Final touches

If you want to go the extra 10% for your codebase, you can make sure it is formatted properly. Execute terraform fmt. It produces no output if all your files are formatted correctly and prints the names of the files it modified otherwise.

To make sure your declaration keeps working in the future, you can add an explicit provider version constraint. This changes the provider definition to the following:

provider "digitalocean" {
  version = "~> 1.12"
  token   = var.do_token
}

Make sure you haven’t broken any formatting with this change by running terraform fmt again.
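Newer Terraform releases (0.13 and later) deprecate the version argument inside the provider block in favour of a required_providers block. Here is a sketch of the equivalent constraint, assuming the provider is installed from the public registry under the digitalocean/digitalocean source address. The file name versions.tf is only a common convention:

```hcl
// versions.tf (conventional but optional file name)
terraform {
  required_providers {
    digitalocean = {
      source  = "digitalocean/digitalocean"
      version = "~> 1.12" // same constraint: any 1.x release from 1.12 upward
    }
  }
}
```

With this in place, the provider block itself only needs the token argument.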

Conclusion

These 18 lines of code are a small step for the developer but a big step for your infrastructure: they mark the first steps toward the infrastructure-as-code approach and make it extremely easy and fast to deploy the cluster and clean it up with no UI interactions.